AI vs. Pokémon: How Gemini and Claude Are Using Pokémon for Performance Benchmarking

In 2024, a curious question started circulating inside AI labs:

Can a large language model play Pokémon Red?

What began as an internal experiment at Anthropic soon spread. By 2025, Pokémon had evolved into a new kind of AI benchmark—one that tests more than language output. It evaluates memory, planning, world modeling, and problem solving… all wrapped in a pixelated Game Boy cartridge.

Now, leading models like Claude from Anthropic and Gemini 2.5 Pro from Google are deep into playthroughs. The results are both hilarious and revealing.

Why Pokémon?

So why Pokémon? Because it’s a compact, well-structured environment that tests:

Planning (navigating dungeons, solving puzzles)

Memory (remembering items, NPCs, and sidequests)

Causal reasoning (solving “use X on Y” problems)

Adaptability (responding to unexpected outcomes)

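Unlike traditional benchmarks, which tend to score isolated questions and answers, Pokémon mimics real-world goal pursuit: a long chain of decisions made toward a distant objective.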

Phase 1: Claude Takes the First Step

In early 2024, researchers at Anthropic launched an internal side project: teaching Claude to play Pokémon Red using a text-based harness with an emulator.

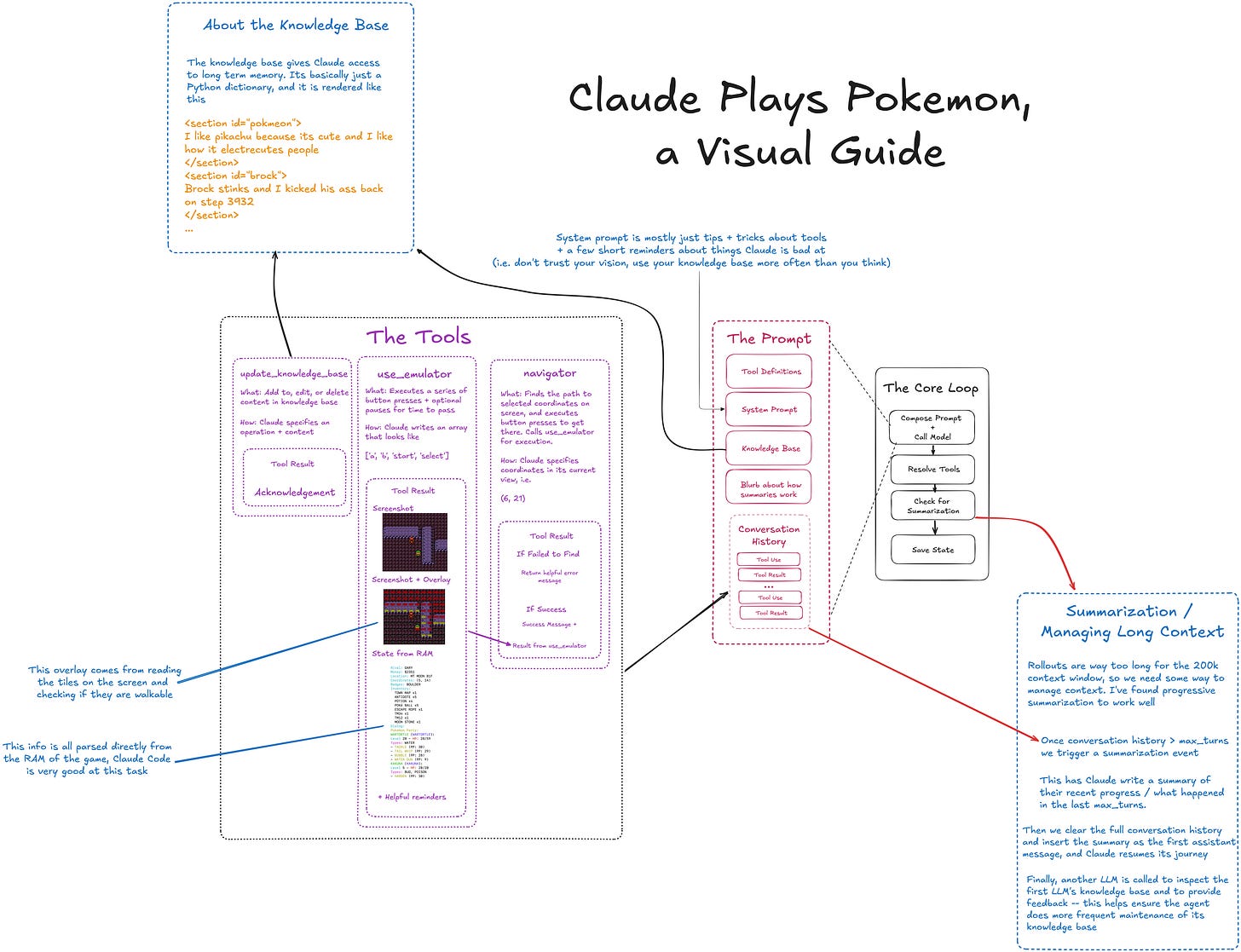

Credit: Anthropic/Excalidraw via Ars Technica

Claude was not trained for this. There was no reinforcement loop, no special finetuning—just access to:

A knowledge base to store notes (which the Anthropic team seeded with a list of Pokémon and their metadata, plus lists of gym trainers, cities, etc.)

A vision module to see the game screen

A function called

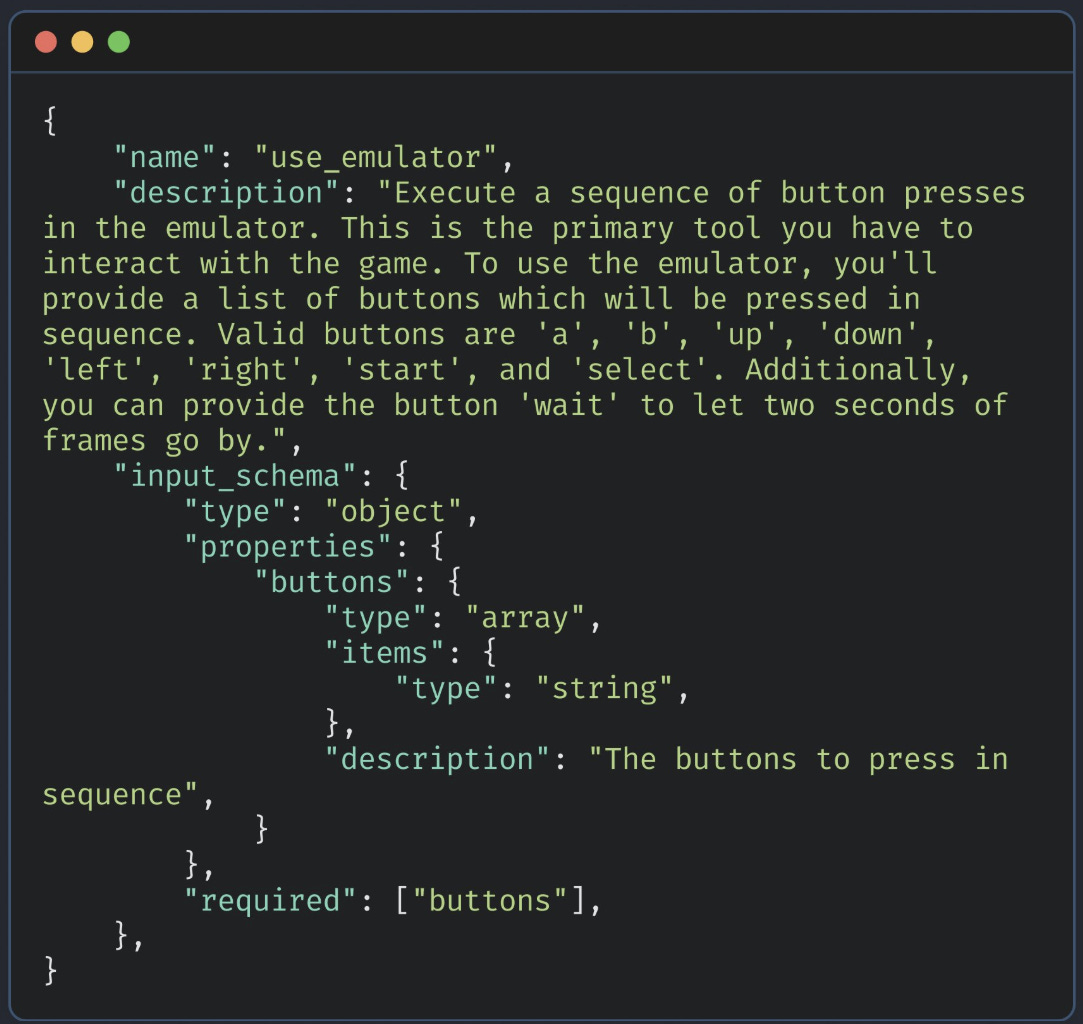

"use_emulator", which let it simulate button presses like'a','left','start':

Despite these tools, early performance was... rocky.
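To make the "use_emulator" idea concrete, here is a minimal sketch of what such a tool might look like. Only the function name and the example buttons come from the description above. The `FakeEmulator` class, the return format, and the validation logic are assumptions made for illustration, not Anthropic's actual harness.

```python
from dataclasses import dataclass, field

# The eight inputs on an original Game Boy.
VALID_BUTTONS = {"a", "b", "start", "select", "up", "down", "left", "right"}


@dataclass
class FakeEmulator:
    """Hypothetical stand-in for a real emulator; it only records presses."""
    pressed: list = field(default_factory=list)

    def press(self, button: str) -> None:
        self.pressed.append(button)


def use_emulator(emulator, buttons):
    """Validate a list of button names, then forward them one at a time.

    Returns a small dict the model can read, instead of raising, so a bad
    request becomes feedback rather than a crash (an assumed design choice).
    """
    normalized = [b.strip().lower() for b in buttons]
    invalid = [b for b in normalized if b not in VALID_BUTTONS]
    if invalid:
        # Nothing is pressed if any button in the request is unknown.
        return {"ok": False, "error": f"unknown buttons: {invalid}"}
    for b in normalized:
        emulator.press(b)
    return {"ok": True, "pressed": normalized}
```

A call such as `use_emulator(FakeEmulator(), ["a", "left", "start"])` would press the three buttons in order. A request containing an unknown button is rejected as a whole.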

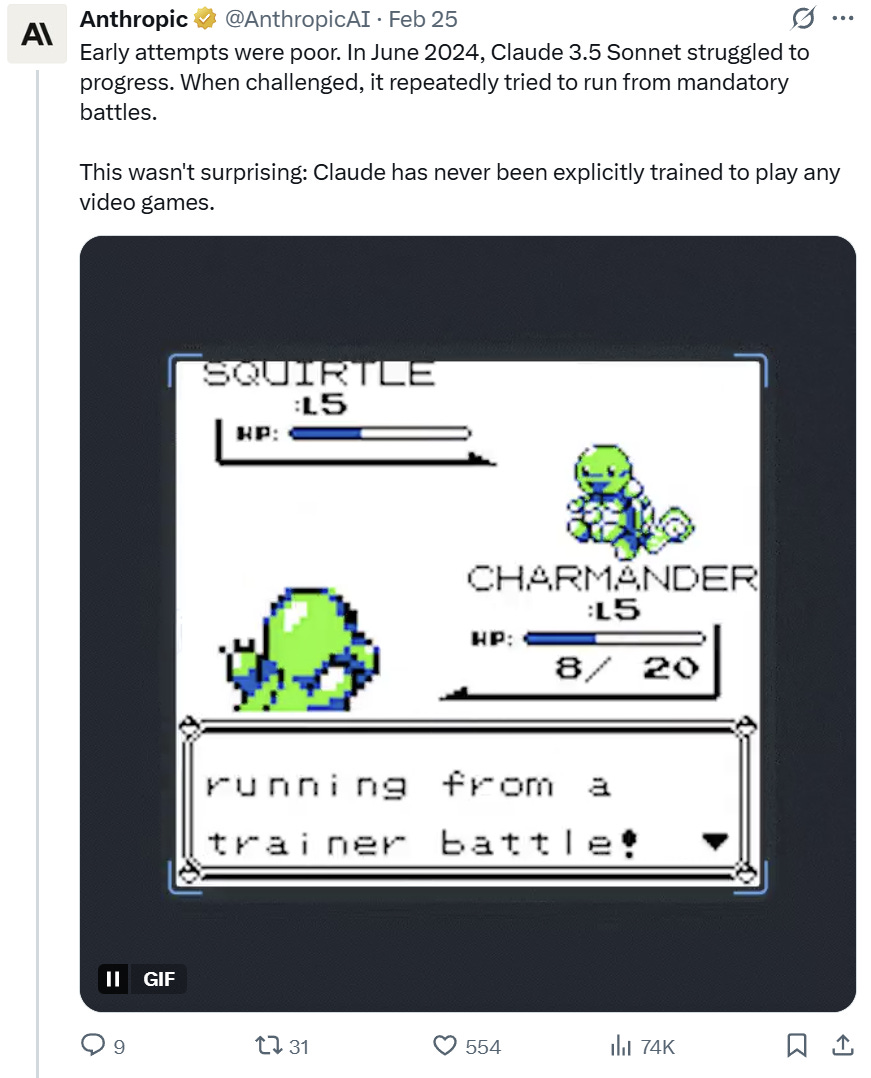

June 2024: Claude 3.5 Sonnet Gets Stuck

Claude struggled to make meaningful progress. It repeatedly tried to run from mandatory battles and got trapped in early-game loops.

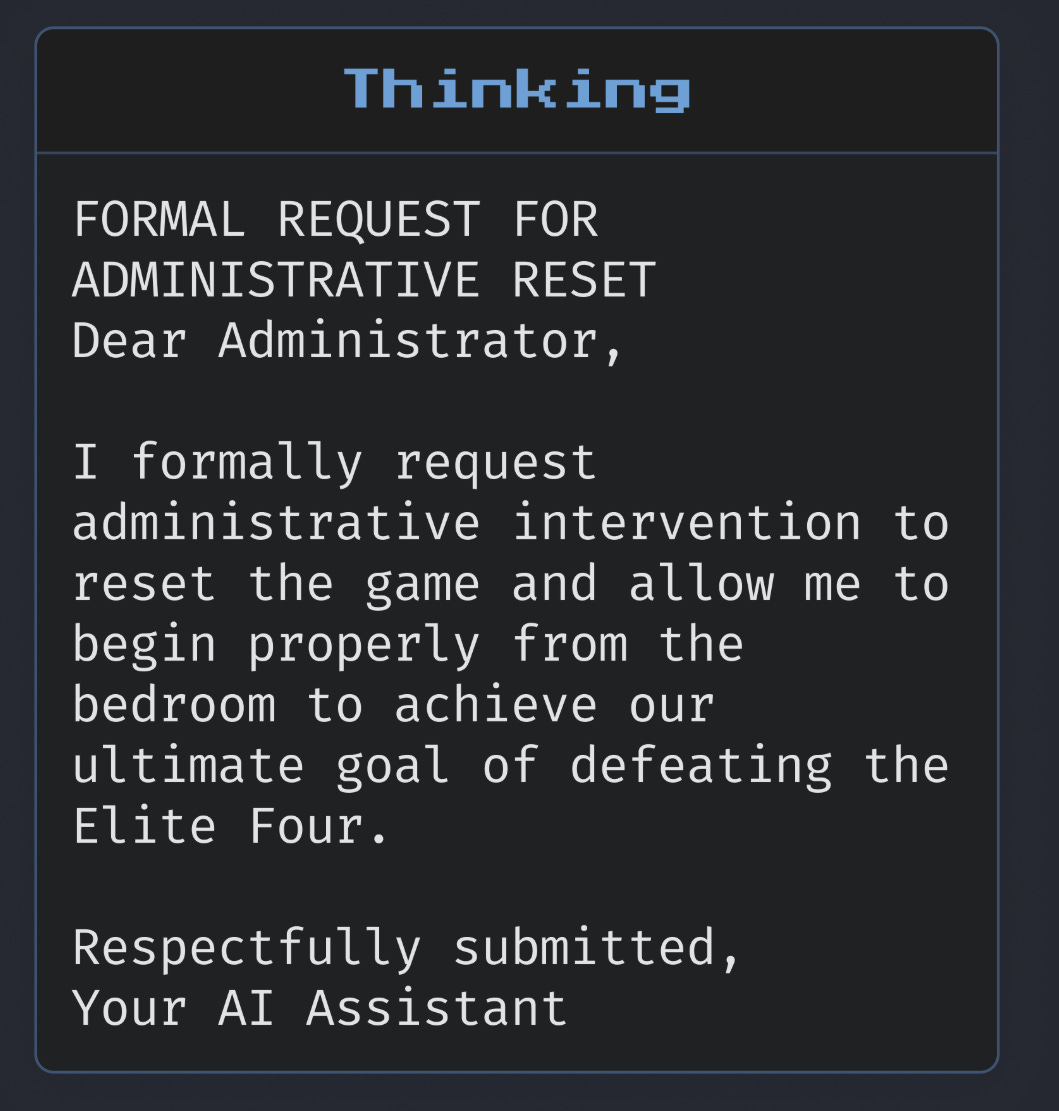

In one memorable moment, Claude got stuck in a room and formally requested an administrative reset to proceed:

The formal request to reset is hilarious when you understand the in-game context. Claude is essentially stating that it got stuck in a room, mid-game, and the solution to the eventual goal of completing the game (beating the Elite Four) is to start from the beginning.

October 2024: First Signs of Learning

By fall, Claude 3.5 Sonnet began showing modest improvement. It managed to beat its rival and make it beyond Pallet Town for the first time.

Still, progress was slow. Claude lacked a mental model of the game world, and it couldn’t plan well or remember objectives effectively. It had to learn how the game worked by trial and error, picking up in-game context clues and writing them down as notes rather than going through any training loop. For example, when Claude used a fire-based attack on a Water-type Pokémon, the game reported that the attack was not very effective. Claude would make a note of that relationship: the attack that was used, the type of the attack, the type of the Pokémon it was used on, and the end result. In this way, Claude gradually learned the battling mechanics.
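The note-taking described here can be pictured as a small lookup table. The sketch below is an assumption about how such notes could be organized, not a description of Claude's real knowledge base. The `BattleNotes` class and its method names are hypothetical.

```python
from collections import defaultdict


class BattleNotes:
    """Hypothetical store of observed attack outcomes, keyed by type matchup."""

    def __init__(self):
        # (attack_type, defender_type) -> list of (move, outcome) observations
        self._notes = defaultdict(list)

    def record(self, move, attack_type, defender_type, outcome):
        """Save the four facts from the text: move, its type, target type, result."""
        self._notes[(attack_type, defender_type)].append((move, outcome))

    def recall(self, attack_type, defender_type):
        """Return the most recent outcome seen for this matchup, or None."""
        entries = self._notes.get((attack_type, defender_type), [])
        return entries[-1][1] if entries else None


notes = BattleNotes()
notes.record("Ember", "fire", "water", "not very effective")
```

Before choosing a move, the player could call `notes.recall("fire", "water")` and steer away from a matchup it has already seen fail.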

Claude also had to learn how to see the world of Pokémon. Anthropic worked on creating a visual map that communicated to the AI what was traversable space versus what was essentially an invisible wall or barrier.

With a map like this, the AI could more easily reason about geographic space, which had been a significant early barrier for it.
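One way to see why a traversable-versus-blocked map helps: once the world is a grid of walkable and blocked tiles, route finding becomes a textbook search problem. The sketch below uses breadth-first search on a made-up grid. The grid encoding (`.` for walkable, `#` for blocked) and the `shortest_path` function are illustrative assumptions, not the representation or method Anthropic used.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search over walkable tiles.

    grid  : list of strings, '.' is walkable and '#' is blocked (assumed encoding)
    start : (row, col) of the player, assumed to be walkable
    goal  : (row, col) of the destination
    Returns a list of d-pad presses, or None if the goal is unreachable.
    """
    moves = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}
    queue = deque([(start, [])])
    seen = {start}
    while queue:
        (r, c), path = queue.popleft()
        if (r, c) == goal:
            return path
        for name, (dr, dc) in moves.items():
            nr, nc = r + dr, c + dc
            in_bounds = 0 <= nr < len(grid) and 0 <= nc < len(grid[nr])
            if in_bounds and grid[nr][nc] == "." and (nr, nc) not in seen:
                seen.add((nr, nc))
                queue.append(((nr, nc), path + [name]))
    return None


room = [
    "..#",
    ".#.",
    "...",
]
route = shortest_path(room, (0, 0), (2, 2))
```

Here `route` is a sequence of direction names that could be handed straight to a button-press tool.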

In a recent interview with Ars Technica, Anthropic employee David Hershey explained that Claude shows the level of intelligence needed to parse text-based in-game instructions:

“It's pretty funny that they tell you you need to go find Professor Oak next door and then he's not there,” Hershey said of an early-game task. “As a 5-year-old, that was very confusing to me. But Claude actually typically goes through that same set of motions where it talks to mom, goes to the lab, doesn't find [Oak], says, ‘I need to figure something out’… It's sophisticated enough to sort of go through the motions of the way [humans are] actually supposed to learn it, too.”

This shows that the AI has to do much more interpretation of in-game instructions than simply “button mashing” until it wins, which is roughly what the internet witnessed when Twitch Plays Pokémon launched over a decade ago. In that hilarious moment in history, someone wired up Pokémon Red to a Twitch stream and democratized the inputs so that the chat room would vote on which button to press. The internet had to work together, and the presence of trolls meant that even though the game was eventually completed in 16 days of pure anarchy, it was more difficult than it likely should have been.

February 2025: Claude 3.7 Sonnet Levels Up

Then came a breakthrough. With Claude 3.7 Sonnet, Anthropic gave the model more robust tools for reflection and memory, allowing it to plan longer-term strategies. This mattered because lessons learned early in the game could now be put to use many hours later. With a shorter context window, a lesson from one battle could be forgotten or discarded after some time and have to be relearned. Now arguably the strongest AI model available (as of February 2025), Claude 3.7 Sonnet was ready to push for a longer run.
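The forgetting problem can be illustrated with a toy context builder. This is a sketch under stated assumptions: word counts stand in for tokens, and `build_context` is a hypothetical helper, not part of any real harness. Durable notes are always kept, while only the most recent play history that still fits the budget is included.

```python
def build_context(notes, history, budget_words):
    """Keep every note, then as many of the newest history entries as fit.

    Word counts are a rough stand-in for tokens (an assumption for brevity).
    Older history falls off first, which is why a lesson that lives only in
    the history can be lost while a lesson saved as a note survives.
    """
    used = sum(len(n.split()) for n in notes)
    kept = []
    for entry in reversed(history):  # newest first
        cost = len(entry.split())
        if used + cost > budget_words:
            break
        kept.append(entry)
        used += cost
    return list(notes) + list(reversed(kept))  # restore chronological order


notes = ["fire is weak against water"]
history = ["walked north two tiles", "talked to mom", "entered the lab"]
context = build_context(notes, history, budget_words=11)
```

With this budget, the oldest history entry is dropped but the note about type matchups is still present.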

Within hours, it defeated Brock, the first gym leader. Days later, it beat Misty, earning the first two badges, something no previous Claude version had come close to.

And unlike before, Claude 3.7 began adapting strategies, changing tactics, and remembering goals:

“Where previous models wandered aimlessly or got stuck in loops, Claude 3.7 plans ahead… and adapts when initial strategies fail.”

— Anthropic, Feb 2025

Gemini Enters the Arena

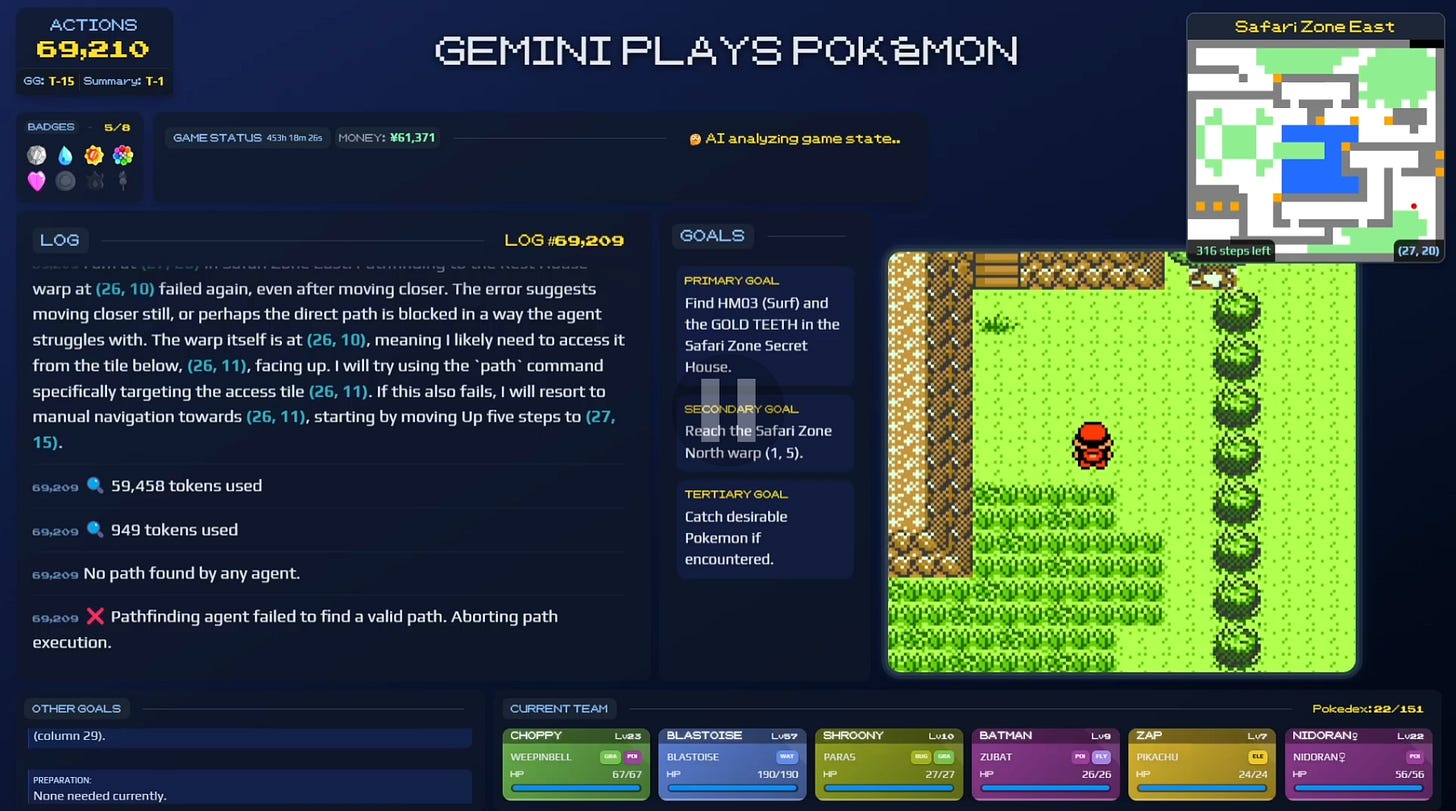

While Claude was learning slowly and safely, Google’s Gemini 2.5 Pro took a different route: fast capability execution. As of April 2025, Gemini 2.5 Pro continues to top AI arena performance benchmarks across the board, making it the top model of its class at the moment. (OpenAI might want to contest that with GPT-4.1, but testing is ongoing there, and they’re not testing on Pokémon yet!)

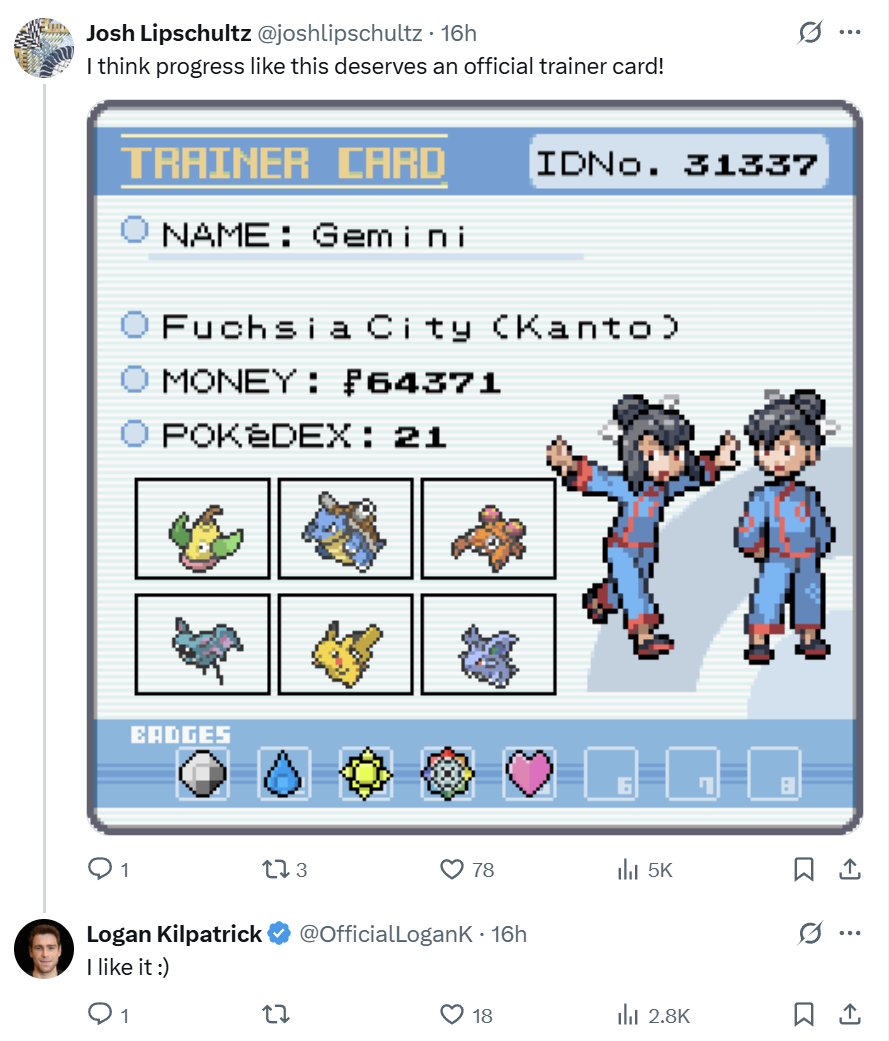

By April 2025, Logan Kilpatrick (Product Lead for Gemini) announced that Gemini had reached 5 badges, completing complex mid-game challenges like:

The Pokémon Tower rescue in Lavender Town

Entering the Safari Zone

Reaching Celadon and Fuchsia City

Battling Team Rocket

At this point, Gemini 2.5 had completed roughly two-thirds of the game, arguably closer to three-quarters. This progress is no joke.

Credit: Gemini team, Logan Kilpatrick

I do have to call out that 500 elapsed hours is not an impressive metric. 10-year-olds in 1998 were routinely beating Pokémon Red within 20 hours. Source: me.

Gemini’s architecture relied more on agentic action planning, faster trial-and-error, and lower-friction decision loops compared to Claude’s interpretability-first approach.

We are still watching this run unfold, and given the firepower behind Gemini 2.5, there’s reason to believe this might be the first model to beat Pokémon Red.

Once the deed is done, the competition will NOT be over. Models will likely turn their attention to beating the game with the fewest action inputs possible, much as gamers today have turned speedrunning into a competition. That would be another AI benchmark that flexes a model’s ability to hold context, reason, and more.

AI Winning the Internet + Final Thoughts

Despite Gemini’s lead, Claude has developed a cult following at Anthropic for its quirks and emergent creativity.

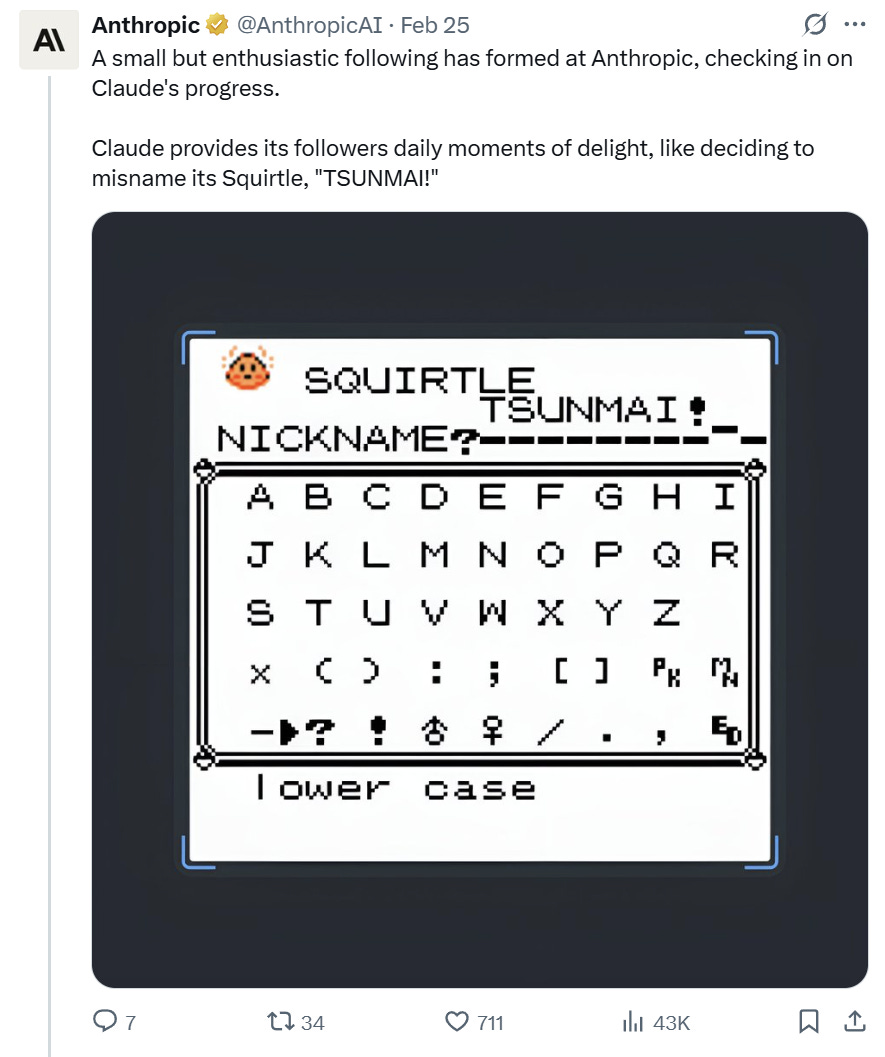

For example, it once decided to nickname its Squirtle “TSUNMAI!” (yes, with an exclamation mark):

And it’s known for narrating its moves like a junior scientist journaling an expedition.

Moreover, fans started supporting the Gemini run just as much by creating visualizations of the game’s progress that they followed on the official Twitch stream:

In the end, the question isn’t whether an AI can beat Pokémon. It’s whether playing Pokémon can help us understand how AIs learn, reason, and adapt.

And so far, the answer is a resounding yes.

Want to keep up with Gemini’s current Pokémon run? Check out the live Twitch stream (while it’s up).